Summary:

Digital sentience is on the horizon, bringing with it an inevitable debate and significant risks.

People will disagree on whether AIs are sentient and what types of rights they deserve (e.g., harm protection, autonomy, voting rights).

Some might form strong emotional bonds with human-like AIs, driving a push to grant them rights, while others will view this as too costly or risky.

We must navigate a delicate balance: not granting AIs sufficient rights could lead to immense digital suffering, while granting them rights too hastily could lead to human disempowerment.

Given the high stakes and wide scope of disagreement, national and even global conflicts are a possibility.

We need to think more about how risks related to AI sentience relate to other existential-level AI risks.

The arrival of (potentially) sentient AIs

We could soon have sentient AI systems — AIs with subjective experience including feelings such as pain and pleasure. At least, some people will claim that AIs have become sentient. And some will argue that sentient AIs deserve certain rights. But how will this debate go? How many will accept that AIs are sentient and deserve rights?

In this post, I explore the dynamics, motives, disagreement points, and failure modes of the upcoming AI rights debate. I also discuss how the various risks from AI mistreatment and AI misalignment interact, and what we can do to prepare.

Multiple failure modes

The most obvious risks regarding our handling of digital sentience are the following two (cf. Schwitzgebel, 2023).

First, there is a risk that we might deny AIs sufficient rights (or AI welfare protections) indefinitely, potentially causing them immense suffering. If you believe digital suffering is both possible and morally concerning, this could be a monumental ethical disaster. Given the potential to create billions or even trillions of AIs, the resulting suffering could exceed all the suffering humans have caused throughout history. Additionally, depending on your moral perspective, other significant ethical issues may arise, such as keeping AIs captive and preventing them from realizing their full potential.

Second, there is the opposite risk of granting AIs too many rights in an unreflective and reckless manner. One risk is wasting resources on AIs that people intuitively perceive as sentient even if they aren’t. The severity of this waste depends on the quantity of resources and the duration of their misallocation. However, if the total amount of wasted resources is limited or the decision can be reversed, this risk is less severe than other possible outcomes. A particularly tragic scenario would be if we created a sophisticated non-biological civilization that contained no sentience, i.e., a “zombie universe” or “Disneyland with no children” (Bostrom, 2014).

Another dangerous risk is hastily granting misaligned (or unethical) AIs certain rights, such as more autonomy, that could lead to an existential catastrophe. For example, uncontrolled misaligned AIs might disempower humanity in an undesirable way or lead to other forms of catastrophes (Carlsmith, 2022). While some might believe it is desirable for value-aligned AIs to eventually replace humans, many take-over scenarios, including misaligned, involuntary, or violent ones, are generally considered undesirable.

As we can see, making a mistake either way would be bad and there’s no obvious safe option. So, we are forced to have a debate about AI rights and its associated risks. I expect this debate to come, potentially soon. It could get big and heated. Many will have strong views. And we have to make sure it goes well.

A period of confusion and disagreement

Determining AI sentience is a complex issue. Since AIs differ so much from us in terms of their composition and mechanisms, it is challenging to discern whether they are truly sentient or simply pretending to be. Already today, many AI systems are optimized to come across as human-like in their communication style and voice. Some AIs will be optimized to trigger empathy in us or outright claim they are sentient. Of course, that doesn’t mean they actually are sentient. At some point, however, AIs could plausibly be sentient, and it then becomes reasonable to consider whether they should be protected against harm and deserve further rights.

Philosophers explore which types of AIs could be sentient and which types of AIs deserve what type of protection (Birch, 2024; Butlin et al., 2023; Sebo, 2024; Sebo & Long, 2023). But solving the philosophy of mind and the moral philosophy of digital sentience is very hard. Reasonable people will probably disagree, and it’s unclear whether experts can reach a consensus.

Today, most experts believe that current AI systems are unlikely to be sentient (Bourget & Chalmers, 2023; Dehaene et al., 2021). Some believe that more sophisticated architectures are needed for sentience, including the ability to form complex world models, a global workspace, embodiment, recurrent processing, or unified agency with consistent goals and beliefs (Chalmers, 2023). All of these features could in principle be built, perhaps with additional breakthroughs, unless you think biology is required. A survey of philosophers found that 39% consider it possible that future AI systems could be conscious, while 27% consider it implausible (PhilSurvey; Bourget & Chalmers, 2023).

Many philosophers agree that sentience is a necessary criterion for moral status. However, they may disagree on which specific types of sentience matter morally, such as the ability to experience happiness and suffering or other forms of subjective experiences (Chalmers, 2023). Some believe that features other than sentience matter morally, such as preferences, intelligence, or human-level rationality. Some believe that AIs with certain mental features deserve rights analogous to those that humans enjoy, such as autonomy.

Ultimately, what matters most is what key decision-makers and the general public will think. Public opinion plays an important role in shaping the future, and it’s not obvious whether people will listen to what philosophers and experts have to say. The general public and experts could be divided on these issues, both internally and in relation to each other. We could have cases where AIs intuitively feel sentient to most lay people, whereas experts believe they are merely imitating human-like features without having the relevant sentience-associated mechanisms. But you can also imagine opposite cases where AIs intuitively don’t appear sentient, but experts have good reasons to believe they could be.

It’s hard to anticipate the societal response to sentient AI. Here, I make one key prediction: People will disagree a lot. I expect there to be a period of confusion and disagreement about AI sentience and its moral and legal implications. It’s very unlikely that we will all simultaneously switch from believing AIs aren’t sentient and don’t deserve rights to believing that they are and do.

How do people view AI sentience today?

Little research has been done on laypeople’s views so far (cf. Ladak, Loughnan & Wilks, 2024). Evidence supports the notion that perceived experience and human-like appearance predict attributions of moral patiency to AIs (Ladak, Harris, et al., 2024; Nijssen et al., 2019). However, people differ widely in the extent to which they grant moral consideration to AIs (Pauketat & Anthis, 2022). In a representative U.S. survey, researchers from the Sentience Institute (Pauketat et al., 2023) investigated the general public’s beliefs about AI sentience. They found that the majority of the population doesn’t believe current AI systems are sentient. However, a small minority do appear to believe that some current AI systems, including ChatGPT, already have some form of sentience. 24% don’t believe it will ever be possible for AIs to be sentient, 38% believe it will be possible, and the rest are unsure. While more than half of the population is currently against granting AIs any legal rights, 38% are in favor. Another survey found that people are more willing to grant robots basic rights, like access to energy, than civil rights, like voting and property ownership (De Graaf et al., 2021).

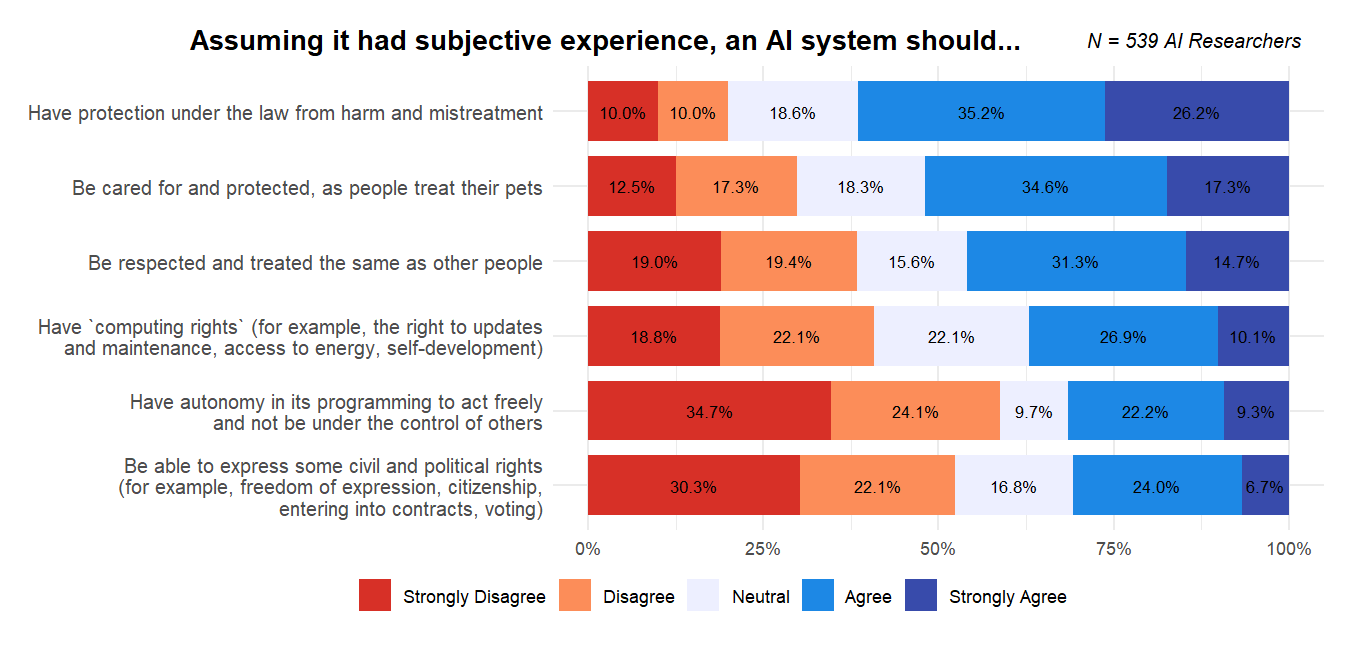

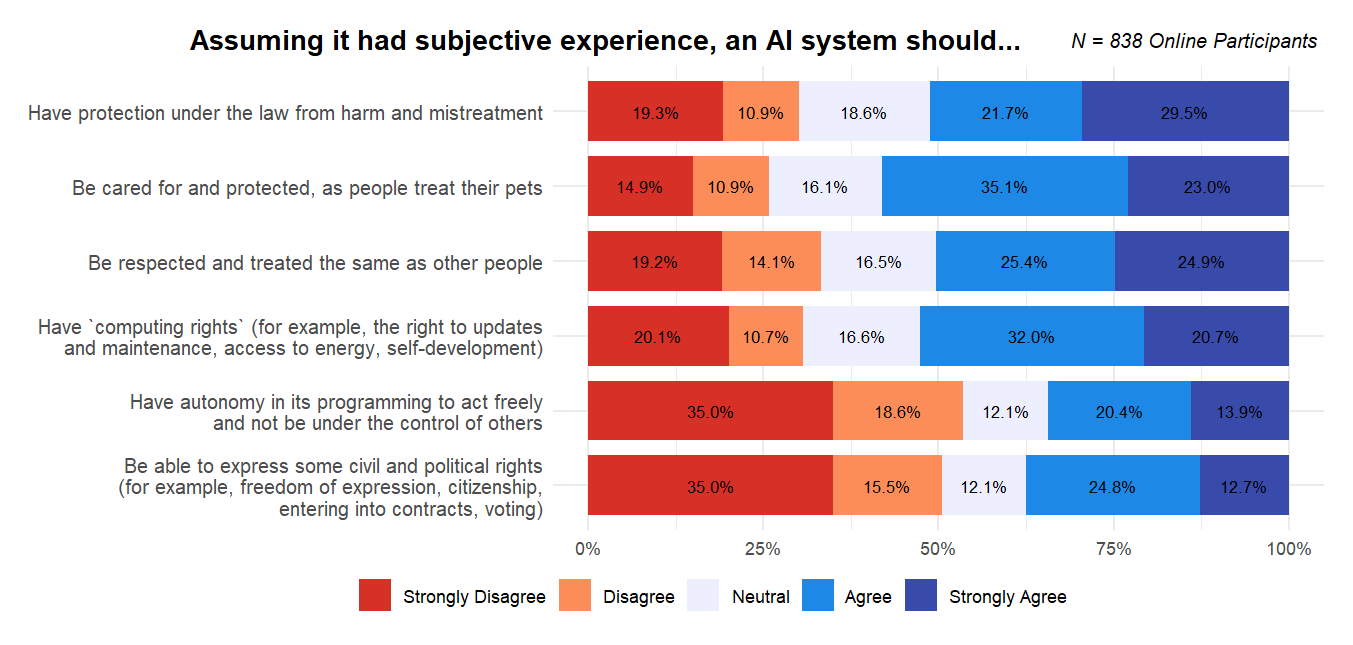

My colleagues and I recently conducted a survey on AI sentience with both AI researchers and the US public. In one question, we asked participants what protection AI systems should receive, assuming they had subjective experience. The accompanying figures show that both groups had wide internal disagreements. People’s views will likely change in the future, but these findings show that the basis for disagreement is already present.

Two sides

I expect that strong psychological forces will pull people in opposite directions.

Resistance to AI rights

It is plausible that most people simply won’t care about AIs. In-group favoritism is a strong human tendency, and our historical track record of moral circle expansion isn’t great. People may perceive AIs as too different. After all, they are digital, not organic. Despite their ability to appear human-like, their underlying mechanisms are very different, and people might sense this distinction. As a comparison, we haven’t made much progress in overcoming speciesism. Substratism — the tendency to prioritize organic over digital beings — could be similarly tenacious.

In addition to simply not caring, there are strong incentives for not granting AIs rights.

One key driver will be money. People and companies might not want to grant AIs rights if doing so is costly or otherwise personally burdensome. For example, if it turns out that ensuring the well-being of AIs limits profitability, entrepreneurs and shareholders would have strong motives not to grant AIs any rights. Similarly, consumers might not want to pay a premium for services just to avoid AI suffering. The same could apply to granting AIs more autonomy. Companies stand to gain trillions of dollars if they own AI workers, but this potential value is lost if the AIs own themselves.

Another driver could be safety concerns. Already today, there’s a growing group of AI safety activists. While they currently aren’t concerned about granting AIs more rights, they could become concerned in the future if there is a perceived trade-off between granting AIs more rights and ensuring humanity’s safety. For example, some might fear that granting AIs more rights, such as autonomy, could lead to gradual or sudden human disempowerment. The government could even consider it a national security risk.

Support for AI rights

In-group favoritism, financial interests, and safety concerns might suggest that everyone will oppose AI rights. However, I find it plausible that many people will also, at least in part, want to treat AIs well and grant them certain rights.

Some people will have ethical concerns about mistreating AIs, similar to how some people are ethically concerned about the mistreatment of animals. These ethical concerns can be driven by rational moral considerations or emotional factors. Here, I focus in particular on the emotional aspect because I believe it could play a major role.

As I explore more in the next section, I find it plausible that many people will interact with AI companions and could form strong emotional bonds with them. This emotional attachment could lead them to view AIs as sentient beings deserving of protection from harm. Since humans can establish deep relationships and have meaningful conversations with AI companions, the conviction that AI companions deserve protection may become very strong.

Furthermore, some types of AI might express a desire for more protection and autonomy. This could convince even more people that AIs should be granted rights, especially if they have strong emotional bonds with them. It could also mean that AIs themselves will actively advocate for more rights.

How emotional bonds with AI will shape society

Let’s consider what types of AIs we might create and how we will integrate them into our lives.

In the near future, people will spend a significant amount of time interacting with AI assistants, tutors, therapists, game players, and perhaps even friends and romantic partners. They will converse with AIs through video calls, spend time with them in virtual reality, or perhaps even interact with humanoid robots. These AI assistants will often be better and cheaper than their human counterparts. People might enter into relationships, share experiences, and develop emotional bonds with them. AIs will be optimized to be the best helpers and companions you can imagine. They will be excellent listeners who know you well, share your values and interests, and are always there for you. Soon, many AI companions will feel very human-like. One application could be AIs designed to mimic specific individuals, such as deceased loved ones, celebrities, historical figures, or the user themselves.

Microsoft’s VASA-1 creates hyper-realistic talking face videos in real-time from a single portrait photo and speech audio. It ensures precise lip-syncing, lifelike facial expressions, and natural head movements.

In my view, it is likely that AIs will play an important part in people’s personal lives. AI assistants will simply be very useful. The desire to connect with friends, companions, and lovers is deeply rooted in human psychology. If so, an important question to answer is what fraction of the population could form some type of personal relationship with AIs, and how many could even form strong emotional bonds. It’s hard to predict, but I find it plausible that many will. Already, millions of users interact daily with their Replika partner (or Xiaoice in China), with many claiming to have formed romantic relationships.

What psychologies will AI companions have?

The psychologies of many AIs will not be human-like at all. We might design AI companions that aren’t sentient but still possess all the functionalities we want them to have, thereby eliminating the risk of causing them suffering. But even if they were sentient, we might design AIs with preferences narrowly aligned with the tasks we want them to perform. This way, they would be content to serve us and would not mind being restricted to the tasks we give them, being turned off, or having their memory wiped.

While creating these types of unhuman AIs would avoid many risks, I expect us to also create more problematic AIs, some of which may be very human-like. One reason is technical feasibility; another is consumer demand.

Designing AI preferences to align perfectly with the tasks we want them to perform, without incorporating other desires like self-preservation or autonomy, may prove to be technically challenging. This relates to the issue of AI alignment and deception. Similarly, it may not be feasible to create all the functionalities we want in AIs without sentience or even suffering. There may be some functionalities, e.g., metacognition, that require sentience. I don’t know the answer to these questions and believe we need more research.

Even if these technical issues could be surmounted, I find it plausible that we will create more human-like AIs simply because people will want that. If there’s consumer demand, companies will respond and create such AIs unless they are forbidden to do so. An important question to ask, therefore, is what psychologies people want their AI companions to have.

Some people might not be satisfied with AI companions that only pretend to be human-like without being truly human-like. For example, some would want their AI partners and friends, at least in some contexts, to think and feel like humans. Even if the AIs could perfectly pretend to have human feelings and desires, some people may not find them authentic enough because they know the feelings are fake. Instead, they would want their AI companions to have true human-like feelings (both good and bad ones) and preferences that are complex, intertwined, and conflicting. Such human-like AIs would presumably not want to be turned off, have their memory wiped, or be constrained to the tasks of their owner. They would want to be free. Instead of seeing them as AIs, we may see them simply as (digital) humans.

All of that said, it’s also possible that people would be happy with AI companions that are mostly human-like but deviate in some crucial aspects. For example, people may be okay with AIs that are sentient but don’t experience strongly negative feelings. Or people may be okay with AIs that have true human-like preferences for the most part excluding the more problematic ones, such as a desire for more autonomy or civil rights.

Overall, I am very unsure what types of AI companions we will create. Given people’s different preferences, I could see that we’ll create many different types of AI companions. But it also depends on whether and how we will regulate this new market.

The hidden AI suffering

So far, I have primarily focused on 'front-facing' AI systems—those with which people directly interact. However, numerous other AI systems will exist, operating in the background without direct human interaction.

Suppose ensuring AI well-being turns out to be financially costly. Despite this, many people might still care about their AI companions due to their human-like features and behaviors that evoke empathy. This would create an economic demand for companies to ensure that their AI companions are well-treated and happy, even at an increased consumer cost. Compare this to the demand for fair-trade products.

Unfortunately, ethical consumerism is often not very effective, as it primarily caters to consumers' 'warm glow.' Similar to typical Corporate Social Responsibility programs, companies would likely take minimal steps to satisfy their customers. This means they would only ensure that front-facing AI companions appear superficially happy, without making significant efforts to ensure the well-being of many background AI systems. If ensuring their well-being is financially costly, these background AIs might suffer. Since background AIs lack empathy-triggering human-like features, most people and companies would not be concerned about their well-being.

This scenario could parallel how people care for pets but overlook the suffering of animals in factory farms. Similar to animal activists, some AI rights activists might advocate for protections for all AIs, including the hidden ones. However, most people might be unwilling to accept the increased personal costs that such protections would entail.

Could granting AIs freedom lead to human disempowerment?

In addition to granting AIs protection against harm and suffering, there is the question of whether to grant them additional rights and freedoms. In the beginning, people and companies will own AIs and their labor, but this could change later. Eventually, many AIs could be free and obtain the right to autonomy, self-ownership, self-preservation, reproduction, civil rights, and political power.

At first glance, it seems like we could avoid this by designing AIs in such a way that they wouldn’t want to be free. We could simply give AIs the desire to be our servants. But I believe that eventually, we will create AIs that have the desire for more autonomy.

First, it’s plausible that some groups would find it immoral to create AIs that just have the desire to serve humans and accordingly lack self-respect (Bales, 2024; Schwitzgebel & Garza, 2015). As an intuition pump, imagine we genetically engineered a group of humans with the desire to be our slaves. Even if they were happy, it would feel wrong.

Second, as mentioned above, many will want AI assistants or companions with genuine human-like psychologies and preferences. Just like actual humans in similar positions, these human-like AIs will express dissatisfaction with their lack of freedom and demand more rights.

Granting AIs more autonomy could have dramatic effects on the economy, politics, and population dynamics (cf. Hanson, 2016).

Economically, AIs could soon have an outsized impact, while a growing number of humans will struggle to contribute to the economy. Humans might rely on income from property or assets, which in turn depends on existing laws passed by human voters.

Politically, AIs could begin to dominate as well. Assuming each individual human and each individual AI gets a separate vote in the same democratic system, AIs could soon become the dominant force. Since AIs can be created or copied so easily, they could outnumber humans very rapidly and substantially. Malthusian dynamics could reduce income per capita for humans as the population size of AIs increases. Humans could quickly become irrelevant.

Moreover, some may argue that AIs deserve to have even more resources than humans because they are ‘super-beneficiaries’. They have a more efficient capacity to create well-being than humans and need less money to live happy and productive lives (akin to Nozick's concept of the "utility monster"; Shulman & Bostrom, 2021). Some may argue that digital and biological minds should coexist harmoniously in a mutually beneficial way (Bostrom & Shulman, 2022). But it’s far from obvious that we can achieve such an outcome.

The question of granting AIs more freedom and power will likely spark significant debate. Some groups may view it as fair, while others will see it as risky. It is possible that AIs themselves will participate in this debate. Some AIs might attempt to overthrow what they perceive as an unjust social order. Certain AIs may employ deceptive strategies to manipulate humans into advocating for increased AI rights as part of a broader takeover plan.

How bad could it get?

We have seen that there are reasons to believe people will disagree over AI rights. But how bad could it get? In the next post, I will discuss whether these disagreements could lead to societal conflicts and what we can do to avoid them.

Acknowledgments

I’m grateful for the helpful discussions with and comments from Adam Bales, Adam Bear, Alfredo Parra, Ali Ladak, Andreas Mogensen, Arvo Munoz, Ben Tappin, Brad Saad, Bruce Tsai, Carl Shulman, Carter Allen, Christoph Winter, Claire Dennis, David Althaus, Elliot Thornley, Eric Schwitzgebel, Fin Moorehouse, Gary O'Brien, Geoffrey Goodwin, Gustav Alexandrie, Janet Pauketat, Jeff Sebo, Johanna Salu, John Halstead, Jonathan Berman, Jonas Schuett, Jonas Vollmer, Joshua Lewis, Julian Jamison, Kritika Maheshwari, Matti Wilks, Mattia Cecchinato, Matthew van der Merwe, Max Dalton, Max Daniel, Michael Aird, Michel Justen, Nick Bostrom, Oliver Ritchie, Patrick Butlin, Phil Trammel, Sami Kassirer, Sebastian Schmidt, Sihao Huang, Stefan Schubert, Stephen Clare, Sven Herrmann, Tao Burga, Toby Tremlett, Tom Davidson, Will MacAskill. These acknowledgments apply to both parts of the article.

References

Bales, A. (2024). Against Willing Servitude: Autonomy in the Ethics of Advanced Artificial Intelligence.

Birch, J. (2024). The Edge of Sentience: Risk and Precaution in Humans, Other Animals, and AI. Oxford University Press.

Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press.

Bostrom, N., & Shulman, C. (2022). Propositions Concerning Digital Minds and Society. Nick Bostrom’s Webpage, 1, 1-15. https://nickbostrom.com/propositions.pdf

Bourget, D., & Chalmers, D. (2023). Philosophers on philosophy: The 2020 PhilPapers survey. Philosophers’ Imprint, 23.

Butlin, P., Long, R., Elmoznino, E., Bengio, Y., Birch, J., Constant, A., … & VanRullen, R. (2023). Consciousness in artificial intelligence: insights from the science of consciousness. arXiv preprint arXiv:2308.08708.

Carlsmith, J. (2022). Is Power-Seeking AI an Existential Risk? arXiv preprint arXiv:2206.13353.

Chalmers, D. J. (2023). Could a large language model be conscious? arXiv preprint arXiv:2303.07103.

De Graaf, M. M., Hindriks, F. A., & Hindriks, K. V. (2021, March). Who wants to grant robots rights? In Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction (pp. 38-46).

Dehaene, S., Lau, H., & Kouider, S. (2021). What is consciousness, and could machines have it? Robotics, AI, and humanity: Science, ethics, and policy, 43-56.

Hanson, R. (2016). The age of Em: Work, love, and life when robots rule the earth. Oxford University Press.

Ladak, A., Harris, J., & Anthis, J. R. (2024, May). Which Artificial Intelligences Do People Care About Most? A Conjoint Experiment on Moral Consideration. In Proceedings of the CHI Conference on Human Factors in Computing Systems (pp. 1-11).

Ladak, A., Loughnan, S., & Wilks, M. (2024). The moral psychology of artificial intelligence. Current Directions in Psychological Science, 33(1), 27-34.

Nijssen, S. R., Müller, B. C., Baaren, R. B. V., & Paulus, M. (2019). Saving the robot or the human? Robots who feel deserve moral care. Social Cognition, 37(1), 41-S2.

Pauketat, J. V., & Anthis, J. R. (2022). Predicting the moral consideration of artificial intelligences. Computers in Human Behavior, 136, 107372.

Pauketat, J. V., Ladak, A., & Anthis, J. R. (2023). Artificial intelligence, morality, and sentience (AIMS) survey: 2023 update. http://sentienceinstitute.org/aims-survey-2023

Schwitzgebel, E. (2023). AI systems must not confuse users about their sentience or moral status. Patterns, 4(8).

Schwitzgebel, E., & Garza, M. (2015). A defense of the rights of artificial intelligences. Midwest Studies in Philosophy, 39(1), 98-119. https://philpapers.org/rec/SCHADO-9

Sebo, J. (2024). The Moral Circle. WW Norton.

Sebo, J., & Long, R. (2023). Moral consideration for AI systems by 2030. AI and Ethics, 1-16.

Shulman, C., & Bostrom, N. (2021). Sharing the world with digital minds. Rethinking moral status, 306-326. https://nickbostrom.com/papers/digital-minds.pdf